Social-Cognitive Bias & Machine Learning

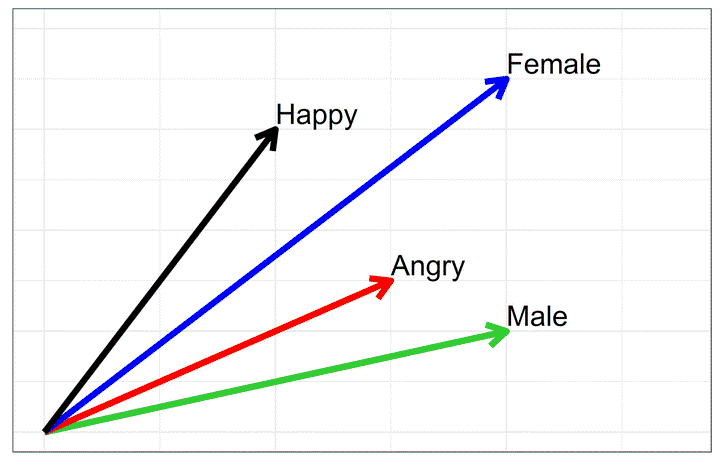

AI models reflect the content of human biases.

We study how AI language and computer vision models reflect human biases. Ongoing projects examine stereotype content, for both single and intersectional identities, in archival data such as books, news articles, and social media. We also use state-of-the-art face recognition models to generate realistic face photographs for studying human biases in face impressions.